How to Use YOLO with ZED

Introduction

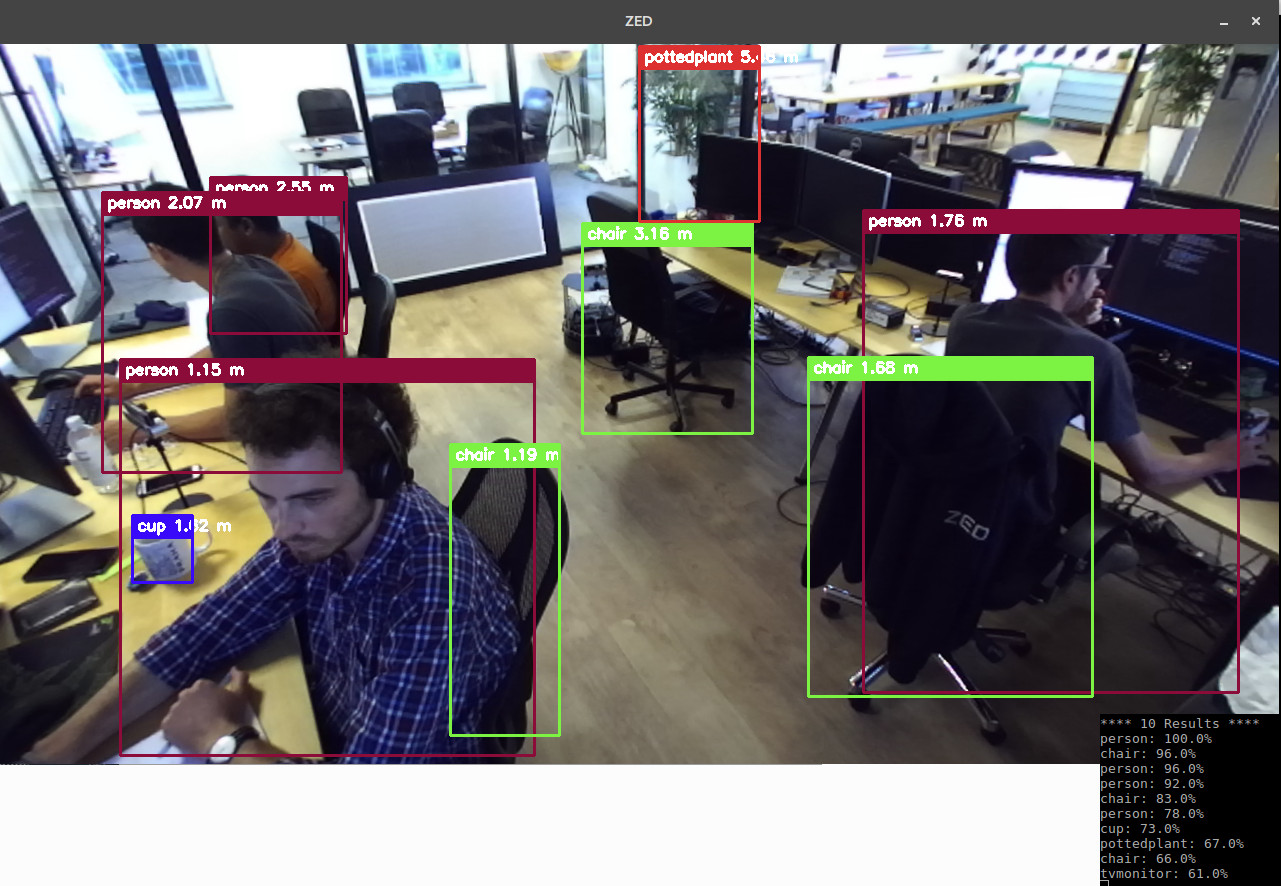

This package lets you run YOLO, a family of deep learning models for object detection, with the ZED stereo camera in Python or C++. It adds 3D localization and tracking to the most recent YOLO models.

These samples are designed to run state-of-the-art object detection models using the highly optimized TensorRT framework. Images are captured using the ZED SDK, and the 2D box detections are then used by the ZED SDK to extract 3D information (localization, 3D bounding boxes) and perform tracking.

The samples use a TensorRT-optimized ONNX model. They are currently compatible with YOLOv5, YOLOv6, YOLOv7, YOLOv9, YOLOv10, YOLOv11, and YOLOv12. If another architecture or a future model uses the same output format as one of the supported models, it should also be compatible. You can use the default models provided by the framework maintainers, which are trained on the COCO dataset (80 classes).
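To make "same output format" more concrete, the sketch below guesses the layout of a YOLO detection output from its tensor shape. It is a hypothetical heuristic written for illustration and is not the ZED SDK's actual logic. It assumes a single output tensor of shape `(batch, a, b)`: YOLOv5-style exports commonly produce rows of 4 box values, 1 objectness score, and the class scores (for example `(1, 25200, 85)` for a 640x640 COCO model), while YOLOv8-style exports commonly produce columns of 4 box values plus the class scores (for example `(1, 84, 8400)`). The function name and the returned labels are made up for this example.

```python
def guess_yolo_layout(shape, num_classes=80):
    """Hypothetical heuristic: guess the output layout from a (batch, a, b) shape."""
    if len(shape) != 3:
        return "unknown"
    _, a, b = shape
    # Rows of [x, y, w, h, objectness, class scores...]
    if b == num_classes + 5:
        return "v5_like"
    # Columns of [x, y, w, h, class scores...] (no objectness term)
    if a == num_classes + 4:
        return "v8_like"
    return "unknown"


print(guess_yolo_layout((1, 25200, 85)))  # YOLOv5-style COCO export
print(guess_yolo_layout((1, 84, 8400)))   # YOLOv8-style COCO export
```

A custom model with a different number of classes changes the expected sizes, which is why `num_classes` is a parameter here.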

A custom detector can also be trained using the same architecture. The following tutorials walk you through the process of training a custom detector:

- YOLOv6: https://github.com/meituan/YOLOv6/blob/main/docs/Train_custom_data.md

- YOLOv5: https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data

Installation

ZED YOLO depends on the following libraries:

- ZED SDK and its Python API

- TensorRT (installed by the ZED SDK with the AI module)

- OpenCV

- CUDA
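Before running the Python samples, it can help to confirm that the Python-side dependencies are importable. The following is a minimal sketch that uses only the standard library. The import names `pyzed`, `tensorrt`, and `cv2` are assumptions about how these packages are exposed in a typical installation, and the script does not check CUDA itself or any C++ setup.

```python
import importlib.util

# Assumed import names: pyzed (ZED Python API), tensorrt, cv2 (OpenCV).
REQUIRED_MODULES = {
    "ZED SDK Python API": "pyzed",
    "TensorRT": "tensorrt",
    "OpenCV": "cv2",
}


def check_dependencies(modules=REQUIRED_MODULES):
    """Return {label: bool} telling whether each module can be located."""
    status = {}
    for label, module_name in modules.items():
        try:
            status[label] = importlib.util.find_spec(module_name) is not None
        except (ImportError, ValueError):
            status[label] = False
    return status


for label, found in check_dependencies().items():
    print(f"{label}: {'found' if found else 'missing'}")
```

A "missing" entry only means the module was not found in the current Python environment. The library may still be installed for C++ use.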

Workflow

There are two main ways of running a YOLO ONNX model with the ZED and TensorRT:

[Recommended] Use the `OBJECT_DETECTION_MODEL::CUSTOM_YOLOLIKE_BOX_OBJECTS` mode in the ZED SDK API to natively load a YOLO ONNX model. The inference code is fully optimized and uses TensorRT internally, and the output is directly available as 2D and 3D tracked objects. This is the easiest and most optimized solution for supported models. The ZED SDK automatically generates the optimized TensorRT engine from the ONNX file. The model format is inferred from the output tensor size, so a future model with an output format similar to the supported models should work.

[Advanced – for unsupported models] Use external code for TensorRT model inference, then ingest the detected boxes into the ZED SDK. This approach is for advanced users, as it requires maintaining the inference code. It is suitable for models not supported by the previously mentioned method.
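For the advanced path, the external inference code has to hand its 2D boxes to the ZED SDK. The sketch below illustrates one way this could look in Python, modeled on the public ZED SDK custom detector samples. It assumes detections arrive as `(cx, cy, w, h, confidence, class_id)` tuples in pixel coordinates, that the camera is already opened, and that object detection was enabled with the `CUSTOM_BOX_OBJECTS` model. The helper names `xywh_to_corners` and `ingest_detections` are hypothetical, and the `pyzed.sl` calls should be checked against your installed SDK version.

```python
def xywh_to_corners(cx, cy, w, h, img_w, img_h):
    """Convert a center-format box (pixels) to 4 corners, clamped to the image.

    Corner order: top-left, top-right, bottom-right, bottom-left.
    """
    x_min = max(0.0, cx - w / 2)
    y_min = max(0.0, cy - h / 2)
    x_max = min(float(img_w - 1), cx + w / 2)
    y_max = min(float(img_h - 1), cy + h / 2)
    return [[x_min, y_min], [x_max, y_min], [x_max, y_max], [x_min, y_max]]


def ingest_detections(zed, detections, img_w, img_h):
    """Send external detections to an opened sl.Camera (assumed setup, see above)."""
    # Imported lazily so xywh_to_corners can be used without the ZED SDK.
    import numpy as np
    import pyzed.sl as sl

    boxes = []
    for cx, cy, w, h, confidence, class_id in detections:
        obj = sl.CustomBoxObjectData()
        obj.unique_object_id = sl.generate_unique_id()
        obj.bounding_box_2d = np.array(xywh_to_corners(cx, cy, w, h, img_w, img_h))
        obj.label = int(class_id)
        obj.probability = float(confidence)
        boxes.append(obj)
    # The SDK then computes 3D position and tracking for the ingested boxes.
    zed.ingest_custom_box_objects(boxes)


print(xywh_to_corners(100, 50, 20, 10, 640, 480))
```

Keeping the box conversion separate from the SDK call makes it possible to unit test the geometry on a machine without a camera or the ZED SDK installed.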

Exporting the model to ONNX (mandatory step)

Refer to the next page, YOLO ONNX Export, to export your YOLO model to a compatible ONNX file. Depending on the YOLO version, there may be several export methods, and not all of them are compatible or optimized.

You can use the default model trained on the COCO dataset (80 classes) provided by the framework maintainers, or a custom-trained model.

Using the ONNX model

Use the samples in the dedicated ZED YOLO GitHub repository to run the ONNX model with the ZED SDK.

More samples are also available at the ZED SDK GitHub.