Spatial Mapping Overview

Spatial mapping (also called 3D reconstruction) is the ability to create a 3D map of the environment. It allows a device to understand and interact with the real world. Spatial mapping is useful for collision avoidance, motion planning, and realistic blending of the real and virtual worlds.

Spatial Mapping #

Spatial mapping is the ability to capture a digital model of a scene or an object in the physical world. By merging the real world with the virtual world, it is possible to create convincing mixed reality experiences or robots that understand their environment.

The ZED continuously scans its environment to reconstruct a 3D map of the real world. It refines its understanding of the world by combining new depth and position data over time. Spatial mapping is available either through the ZEDfu application or the ZED SDK.

How It Works #

The ZED continuously scans its surroundings and creates a 3D map of what it sees. It updates this map as the device moves around and captures new elements in the scene. Since the camera perceives distances beyond the range of traditional RGB-D sensors, it can quickly reconstruct 3D maps of large indoor and outdoor areas.

The SDK saves mapping data relative to a fixed reference coordinate frame, known as the World Frame. If Area Memory is enabled and an Area file is provided during initialization, the map can be loaded repeatedly across sessions and will always appear in the same physical location.
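To illustrate what "relative to a fixed reference frame" means, here is a minimal sketch that converts points from the camera frame to a world frame using a 4x4 pose. The helper names (`make_pose`, `camera_to_world`) and the yaw-only rotation are assumptions made for illustration; this is not the SDK's API.

```python
import math

def make_pose(yaw_rad, tx, ty, tz):
    """Hypothetical helper: 4x4 camera-to-world transform (rotation about Y, then translation)."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def camera_to_world(pose, point):
    """Apply a 4x4 pose to a 3D point expressed in the camera frame."""
    x, y, z = point
    return tuple(
        pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
        for r in range(3)
    )

# The same physical point observed from two camera positions.
pose_a = make_pose(0.0, 0.0, 0.0, 0.0)  # camera at the world origin
pose_b = make_pose(0.0, 1.0, 0.0, 0.0)  # camera moved 1 m along X

world_a = camera_to_world(pose_a, (2.0, 0.0, 3.0))
world_b = camera_to_world(pose_b, (1.0, 0.0, 3.0))
print(world_a, world_b)
```

Both observations map to the same world coordinates, which is why a map stored in the World Frame stays anchored to the same physical location as the camera moves.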

Capturing a Spatial Map #

Spatial mapping represents real-world geometry as a single spatial map. This map can be extracted continuously as it gets updated, or just once after mapping an entire area. A spatial map can either be a Mesh or a Fused Point Cloud (see Mapping Type).

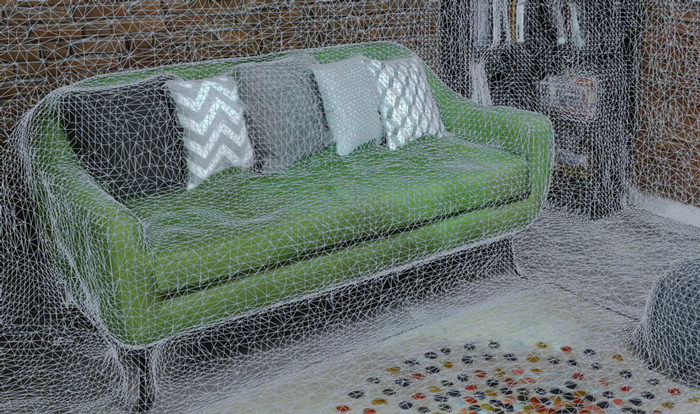

Mesh #

A mesh represents the geometry of the scene as a surface. It is a set of watertight triangles defined by vertices and faces. This surface can be filtered and textured after scanning.
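As a rough illustration of the vertices-and-faces representation, the sketch below stores a tiny mesh as plain Python lists and computes its surface area. The data and helper names are hypothetical and do not reflect how the SDK stores a mesh internally.

```python
import math

# Hypothetical minimal mesh: vertices are (x, y, z) in meters,
# faces are triples of indices into the vertex list.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
faces = [(0, 1, 2), (0, 2, 3)]  # two triangles sharing the edge 0-2

def triangle_area(a, b, c):
    """Area of a triangle: half the norm of the cross product of two edges."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def mesh_area(vertices, faces):
    """Total surface area, summed over all triangles."""
    return sum(triangle_area(*(vertices[i] for i in face)) for face in faces)

print(len(vertices), "vertices,", len(faces), "faces, area:", mesh_area(vertices, faces))
```

Because faces only reference vertex indices, neighboring triangles share vertices instead of duplicating them.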

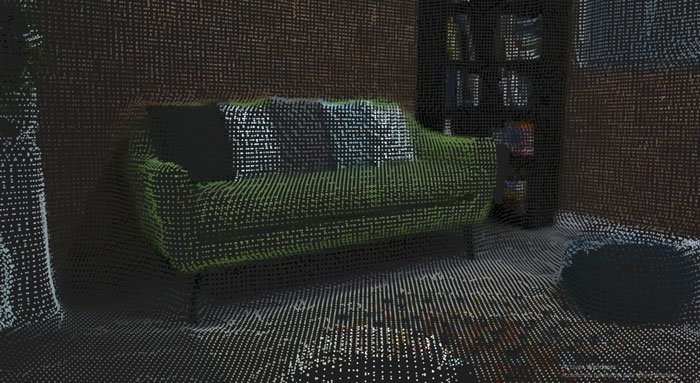

Fused Point Cloud #

A point cloud represents the geometry of the scene by a set of colored 3D points.
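One simple way to picture "fusing" is to merge colored points that fall into the same small cubic cell. The sketch below does this by averaging position and color per cell. It is an illustrative technique under that assumption, and the function name `fuse_points` is hypothetical; the SDK's actual fusion method is not described here.

```python
from collections import defaultdict

def fuse_points(points, cell_size):
    """Merge colored points (x, y, z, r, g, b) that share a cubic cell by averaging them."""
    cells = defaultdict(list)
    for p in points:
        key = tuple(int(p[i] // cell_size) for i in range(3))
        cells[key].append(p)
    fused = []
    for members in cells.values():
        n = len(members)
        fused.append(tuple(sum(m[i] for m in members) / n for i in range(6)))
    return fused

# Two nearby observations of the same surface patch, plus one distant point.
observations = [
    (0.01, 0.0, 1.0, 200, 100, 50),
    (0.03, 0.0, 1.0, 210, 110, 60),
    (0.50, 0.0, 1.0, 10, 20, 30),
]
fused = fuse_points(observations, cell_size=0.05)
print(len(observations), "->", len(fused), "points")
```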

Spatial Mapping Parameters #

Spatial mapping resolution and range can be adjusted at initialization for both spatial map types. If scanning a mesh, you can also specify whether it should be textured (off by default). To learn more, see the Using the API page.

Mapping Resolution #

Controls the level of detail of the spatial map. Resolution is expressed as a distance, so a smaller value means a higher resolution and a more detailed spatial map. It can be set between 1 cm and 12 cm. Capturing high-resolution maps requires more memory and compute resources, so use the lowest resolution (largest value) that your application can tolerate.
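To get a feel for why finer resolution is costly, the following back-of-the-envelope sketch counts the cells of a dense cubic grid covering a room. The dense-grid model and the function name `voxel_count` are assumptions for illustration; the SDK's internal data structure may scale differently.

```python
def voxel_count(extent_cm, resolution_cm):
    """Cells in a dense cubic grid covering extent_cm per side (illustrative model only)."""
    if not 1 <= resolution_cm <= 12:
        raise ValueError("resolution must be between 1 cm and 12 cm")
    cells_per_side = -(-extent_cm // resolution_cm)  # ceiling division on integers
    return cells_per_side ** 3

# A 4 m x 4 m x 4 m volume at three resolutions.
for res in (8, 4, 2):
    print(f"{res} cm -> {voxel_count(400, res):,} cells")
```

Under this model, halving the cell size multiplies the number of cells by eight, which is why a modest change in resolution can have a large effect on memory.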

Mapping Range #

Controls the range of the depth data used to build the spatial map. Increasing the mapping range allows the SDK to capture larger volumes quickly, but at the cost of accuracy. The range can be set between 1 m and 12 m. Reducing the range will improve spatial mapping performance.
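The effect of the range setting can be sketched as a filter that discards depth samples beyond a maximum distance before they are integrated into the map. The function name `filter_by_range` is hypothetical, and measuring range as straight-line distance from the camera is an assumption.

```python
import math

def filter_by_range(points, max_range_m):
    """Keep camera-frame points no farther than max_range_m from the camera."""
    if not 1.0 <= max_range_m <= 12.0:
        raise ValueError("range must be between 1 m and 12 m")
    return [
        p for p in points
        if math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) <= max_range_m
    ]

samples = [(0.0, 0.0, 2.0), (1.0, 0.0, 6.0), (0.0, 0.5, 11.0)]
short_range = filter_by_range(samples, 5.0)
long_range = filter_by_range(samples, 12.0)
print(len(short_range), "points at 5 m,", len(long_range), "points at 12 m")
```

A shorter range leaves fewer samples to process per frame, which is consistent with the performance gain described above.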

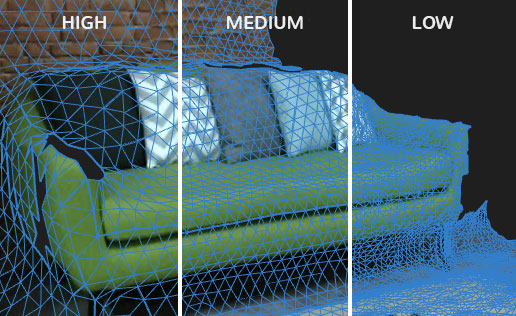

Mesh Filtering #

After capture, it is often desirable to reduce the number of polygons in a mesh so that it is cheaper to render and process. Mesh filtering lets you decimate and optimize 3D models, reducing polygon count while preserving important geometric features.

Three presets are available: HIGH, MEDIUM and LOW. The LOW filtering mode simply fills holes and cleans mesh outliers, while the two other modes perform mesh decimation. Try the different presets to select the one that best suits your application.
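To make the idea of decimation concrete, here is a minimal sketch of vertex clustering, one simple decimation technique: vertices that share a grid cell are merged, and triangles that collapse as a result are dropped. This is not the algorithm behind the SDK presets, and `cluster_decimate` is a hypothetical name.

```python
def cluster_decimate(vertices, faces, cell_size):
    """Vertex clustering: merge vertices sharing a grid cell, drop collapsed triangles."""
    cell_to_new = {}  # grid cell -> index of the merged vertex
    sums = []         # per merged vertex: [sum_x, sum_y, sum_z, count]
    remap = []        # old vertex index -> merged vertex index
    for v in vertices:
        key = tuple(int(c // cell_size) for c in v)
        if key not in cell_to_new:
            cell_to_new[key] = len(sums)
            sums.append([0.0, 0.0, 0.0, 0])
        idx = cell_to_new[key]
        for i in range(3):
            sums[idx][i] += v[i]
        sums[idx][3] += 1
        remap.append(idx)

    # Each merged vertex sits at the centroid of the vertices it replaces.
    new_vertices = [(s[0] / s[3], s[1] / s[3], s[2] / s[3]) for s in sums]

    new_faces, seen = [], set()
    for a, b, c in faces:
        tri = (remap[a], remap[b], remap[c])
        if len(set(tri)) < 3:
            continue  # triangle collapsed to an edge or a point
        if frozenset(tri) in seen:
            continue  # duplicate of a triangle already kept
        seen.add(frozenset(tri))
        new_faces.append(tri)
    return new_vertices, new_faces

vertices = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.2, 0.0, 0.0), (0.0, 1.2, 0.0)]
faces = [(0, 1, 3), (1, 2, 3)]
new_vertices, new_faces = cluster_decimate(vertices, faces, cell_size=0.5)
print(len(faces), "->", len(new_faces), "faces")
```

A larger `cell_size` merges more vertices and removes more triangles, at the cost of fine detail, which mirrors the trade-off between the filtering presets.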

Mesh Texturing #

The SDK can map the 2D images captured during spatial mapping onto the 3D model surface, resulting in a textured mesh.

To create the texture, the SDK records a subset of the left camera images during mapping. These images are processed and assembled into a single texture map. This texture map is then projected onto each face of the 3D mesh using UV coordinates that the SDK generates automatically.
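The SDK generates UV coordinates itself, but the underlying idea of relating a 3D vertex to an image location can be sketched with a pinhole camera model. The intrinsics below (`fx`, `fy`, `cx`, `cy`), the image size, and the function name `project_to_uv` are hypothetical values chosen for illustration.

```python
def project_to_uv(point, fx, fy, cx, cy, width, height):
    """Project a camera-frame 3D point with a pinhole model, normalized to UV in [0, 1)."""
    x, y, z = point
    if z <= 0:
        return None  # point is behind the camera
    u_px = fx * x / z + cx
    v_px = fy * y / z + cy
    if not (0 <= u_px < width and 0 <= v_px < height):
        return None  # point falls outside the image
    return (u_px / width, v_px / height)

# Hypothetical intrinsics for a 1280x720 image.
intrinsics = dict(fx=700.0, fy=700.0, cx=640.0, cy=360.0, width=1280, height=720)

center_uv = project_to_uv((0.0, 0.0, 2.0), **intrinsics)
outside_uv = project_to_uv((5.0, 0.0, 1.0), **intrinsics)
print(center_uv, outside_uv)
```

A vertex on the optical axis maps to the center of the image, while a vertex outside the field of view has no texture coordinate in that image and would need to be covered by another recorded frame.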