ROS 2 - ZED Stereo Node

The easiest way to start a ZED ROS 2 node for a stereo camera is by using the command line:

ros2 launch zed_wrapper zed_camera.launch.py camera_model:=<camera model>

where <camera model> must be replaced with one in the list zed, zedm, zed2, zed2i, zedx, zedxm, and virtual.

Published Topics #

📌 Note: Not all the topics are advertised by default. The version v5.1.0 of the ROS 2 Wrapper introduces new parameters to enable/disable the publishing of each topic. Please refer to the “Configuration parameters” section for more details.

The ZED node publishes data to the following topics:

Status

~/status/health: the general status of the node (custom message).~/status/heartbeat: heartbeat signal to monitor if the node is alive from other nodes (custom message).

Image streams

The image topic names are created using the following naming convention:

~/<sensor_type>/<color_model>/<rect_type>/image📌 Note: Starting from version 5.1.0 of the ROS 2 Wrapper the new convention replaces the previous formats such as

~/<sensor_type>/image_<rect_type>_<color_model>and~/<sensor_type>_<rect_type>/image_<rect_type>_<color_model>(e.g.,~/left/image_rect_color,~/rgb_raw/image_raw_gray). Please update your launch files and scripts to use the new topic names.RGB channel

~/rgb/color/rect/image: Color rectified image.~/rgb/color/rect/camera_info: Color camera calibration data.~/rgb/gray/rect/image: Grayscale rectified image.~/rgb/gray/rect/camera_info: Grayscale camera calibration data.~/rgb/color/raw/image: Color unrectified image.~/rgb/color/raw/camera_info: Color raw calibration data.~/rgb/gray/raw/image: Grayscale unrectified image.~/rgb/gray/raw/camera_info: Grayscale raw calibration data.

Left channel

~/left/color/rect/image: Left sensor RGB rectified image.~/left/color/rect/camera_info: Left sensor RGB camera calibration data.~/left/gray/rect/image: Left sensor Grayscale rectified image.~/left/gray/rect/camera_info: Left sensor Grayscale camera calibration data.~/left/color/raw/image: Left sensor RGB unrectified image.~/left/color/raw/camera_info: Left sensor RGB raw calibration data.~/left/gray/raw/image: Left sensor Grayscale unrectified image.~/left/gray/raw/camera_info: Left sensor Grayscale raw calibration data.

Right channel

~/right/color/rect/image: Right sensor RGB rectified image.~/right/color/rect/camera_info: Right sensor RGB camera calibration data.~/right/gray/rect/image: Right sensor Grayscale rectified image.~/right/gray/rect/camera_info: Right sensor Grayscale camera calibration data.~/right/color/raw/image: Right sensor RGB unrectified image.~/right/color/raw/camera_info: Right sensor RGB raw calibration data.~/right/gray/raw/image: Right sensor Grayscale unrectified image.~/right/gray/raw/camera_info: Right sensor Grayscale raw calibration data.

📌 Note: The

rgbandleftchannels of stereo cameras are identical. We use both names to differentiate the usage: whileleftandrightare used for stereo processing,rgbis used to be associated to the synchronizeddepthmap.

Stereo pair

~/stereo/color/rect/image: side-by-side left/right rectified stereo pair. Calibration data are available in the topics:~/left/color/rect/camera_infoand~/right/color/rect/camera_info.~/stereo/color/raw/image: side-by-side left/right unrectified stereo pair. Calibration data are available in the topics:~/left/color/raw/camera_infoand~/right/color/raw/camera_info.

Depth and point cloud

~/depth/camera_info: Depth camera calibration data.~/depth/depth_registered: Depth map image registered on left image.~/depth/depth_info: minimum and maximum depth information (custom message).~/point_cloud/cloud_registered: Registered color point cloud.~/confidence/confidence_map: Confidence image (doubleing point values).~/disparity/disparity_image: Disparity image.

Sensors data

~/left_cam_imu_transform: Transform from the left camera sensor to the IMU sensor.~/imu/data: Accelerometer, gyroscope, and orientation data in Earth frame.~/imu/data_raw: Accelerometer and gyroscope data in Earth frame.~/imu/mag: Raw magnetometer data (only ZED 2 and ZED 2i).~/atm_press: atmospheric pressure (only ZED 2 and ZED 2i).~/temperature/imu: temperature measured by the IMU sensor.~/temperature/left: temperature measured near the left CMOS sensor (only ZED 2 and ZED 2i).~/temperature/right: temperature measured near the right CMOS sensor (only ZED 2 and ZED 2i).

Positional tracking

~/pose: Absolute 3D position and orientation relative to the Map frame (Sensor Fusion algorithm + SLAM).~/pose/status: the status of the positional tracking module (custom message).~/pose_with_covariance: Camera pose referred to Map frame with covariance.~/odom: Absolute 3D position and orientation relative to the Odometry frame (pure visual odometry for ZED, visual-inertial odometry for ZED Mini).~/odom/status: the status of the odometry from the positional tracking module (custom message).~/path_map: Sequence of camera poses in Map frame.~/path_odom: Sequence of camera odometry poses in Map frame.

Geo Tracking

~/pose/filtered: the GNSS fused pose of the robot~/pose/filtered/status: the status of the GNSS fused pose (custom message).~/geo_pose/: the Latitude/Longitude pose of the robot~/geo_pose/status: the status of the Latitude/Longitude pose (custom message).

3D Mapping

~/mapping/fused_cloud: Fused point cloud created when theenable_mappingservice is called.

Object Detection

~/obj_det/objects: detected objects (custom message).

Body Tracking

~/body_trk/skeletons: detected body skeletons (custom message).

Plane Detection

~/plane: Detected plane (custom message).~/plane_marker: Detected plane object to be displayed on RVIZ 2.

📌 Note: Each topic has a common prefix created by the launch file as

~=/<namespace>/<node_name>/, where<namespace>is replaced with the value of the parametercamera_nameand<node_name>is the name of the node, e.g.zed_node.

Image Transport #

The ROS 2 wrapper supports the stack image_transport introduced with ROS 2 Crystal Clemmys.

All the image topics are published using the image_transport::Publisher object, correctly associating the sensor_msgs::msg::CameraInfo message to the relative sensor_msg::msg::Image message and creates the relative compressed image streams.

We recommend using the standard available image transports:

raw: uncompressed imagecompressed: JPEG or PNG compressed imagetheora: Ogg Theora compressed imagecompressedDepth: depth image compressed with a lossless algorithm

For proper association between images and their corresponding camera information, the ZED ROS 2 Wrapper also publishes camera_info topics for all compressed image streams:

~/rgb/color/rect/image/camera_info~/rgb/gray/rect/image/camera_info~/rgb/color/raw/image/camera_info~/rgb/gray/raw/image/camera_info

📌 Note: The image transport topics are not advertised if NITROS is enabled. Please refer to the dedicated section about NVIDIA® Isaac ROS Nitros for more details.

NVIDIA® Isaac ROS Nitros #

The ZED ROS 2 Wrapper supports the NVIDIA® Isaac™ ROS Nitros package for zero-copy transport of ROS messages using NVIDIA® GPUDirect® RDMA.

NVIDIA® Isaac ROS Nitros integration is enabled and used only if the required packages are manually installed. They are not added as a dependency because this is an optional feature.

For more information about the installation and usage of the NVIDIA® Isaac™ ROS packages, please refer to the dedicated section of the documentation: ZED Isaac™ ROS Integration Overview.

Point Cloud Transport #

The ROS 2 wrapper supports the stack pointcloud_transport introduced with ROS 2 Humble Hawksbill.

The point cloud transport is enabled and used only if the required packages are manually installed, they are not added as a dependency because this is an optional feature:

sudo apt install ros-${ROS_DISTRO}-point-cloud-transport \

ros-${ROS_DISTRO}-point-cloud-transport-plugins \

ros-${ROS_DISTRO}-zlib-point-cloud-transport \

ros-${ROS_DISTRO}-zstd-point-cloud-transport \

ros-${ROS_DISTRO}-draco-point-cloud-transport

Point cloud topics are published using the point_cloud_transport::Publisher object, which provides both standard raw and compressed point cloud streams for efficient data transmission.

QoS profiles #

All the topics are configured by default to use the following ROS 2 QoS profile:

- Reliability: RELIABLE

- History (Depth): KEEP_LAST (10)

- Durability: VOLATILE

- Lifespan: Infinite

- Deadline: Infinite

- Liveliness: AUTOMATIC

- Liveliness lease duration: Infinite

📌 Note: Read the official ROS 2 documentation about the QoS and the relatived settings for details.

The QoS settings can be modified by changing the relative parameters in the YAML files, as described in this official ROS 2 design document.

When creating a subscriber, be sure to use a compatible QOS profile according to the following tables:

Compatibility of QoS durability profiles:

| Publisher | Subscriber | Connection | Result |

|---|---|---|---|

| Volatile | Volatile | Yes | Volatile |

| Volatile | Transient local | No | - |

| Transient local | Volatile | Yes | Volatile |

| Transient local | Transient local | Yes | Transient local |

Compatibility of QoS reliability profiles:

| Publisher | Subscriber | Connection | Result |

|---|---|---|---|

| Best effort | Best effort | Yes | Best effort |

| Best effort | Reliable | No | - |

| Reliable | Best effort | Yes | Best effort |

| Reliable | Reliable | Yes | Reliable |

In order for a connection to be made, all of the policies that affect compatibility must be compatible. For instance, if a publisher-subscriber pair has compatible reliability QoS profiles, but incompatible durability QoS profiles, the connection will not be made.

📌 Note: for a detailed list of all the compatibility settings, please refer to the official ROS 2 documentation.

Configuration parameters #

Specify your launch parameters in the common_stereo.yaml, zed.yaml, zedm.yaml, zed2.yaml, zed2i.yaml, zedx.yaml, zedxm.yaml, and virtual files available in the folder zed_wrapper/config.

General parameters #

Namespace: general

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| camera_timeout_sec | Camera timeout (sec) if communication fails | int |

| camera_max_reconnect | Reconnection temptatives after timeout, before shutting down | int |

| camera_flip | Flip the camera data if it is mounted upsidedown | true, false |

| self_calib | Enable the self-calibration process at camera opening. See https://www.stereolabs.com/docs/api/structsl_1_1InitParameters.html#affeaa06cfc1d849e311e484ceb8edcc5 | true, false |

| serial_number | Select a ZED camera by its Serial Number | int |

| camera_id | Select a ZED camera by its ID (0 for first, 1 for second…) | int |

| pub_resolution | The resolution used for image and depth map publishing. ‘NATIVE’ to use the same general.grab_resolution - CUSTOM to apply the general.pub_downscale_factor downscale factory to reduce bandwidth in transmission | string,‘NATIVE’, ‘CUSTOM’ |

| pub_downscale_factor | Rescale factor used to rescale image before publishing when ‘pub_resolution’ is ‘CUSTOM’ or ‘OPTIMIZED’ | double |

| pub_frame_rate* | Set the video/depth publish rate | double |

| enable_image_validity_check | Sets the image validity check. If set to 1, the SDK will check if the frames are valid before processing | int |

| gpu_id | Select a GPU device for depth computation | int |

| optional_opencv_calibration_file | Optional path where the ZED SDK can find a file containing the calibration information of the camera computed by OpenCV. | string |

| async_image_retrieval | If set to true will camera image retrieve at a framerate different from \ref grab() application framerate. This is useful for recording SVO or sending camera stream at different rate than application | true, false |

| publish_status | Enable publishing of the node status topics | true, false |

* Dynamic parameter

zed.yaml, zedm.yaml, zed2.yaml, zed2i.yaml, zedx.yaml, zedxm.yaml, and virtual.yaml

| Parameter | Description | Value |

|---|---|---|

| camera_model | Type of Stereolabs camera | string, zed, zedm, zed2, zed2i, zedx, zedxm, virtual |

| camera_name | User name for the camera, can be different from camera model | string |

| grab_resolution | The native camera grab resolution. | string, ‘HD2K’, ‘HD1200’,‘HD1080’, ‘HD720’, ‘SVGA’, ‘VGA’, ‘AUTO’ |

| grab_frame_rate | ZED SDK internal grabbing rate | int, ‘15’,‘30’,‘60’,‘90’,‘100’,‘120’ |

SVO input parameters #

Namespace: svo

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| svo_path | Specify SVO filename | string, Note: relative file paths are not allowed |

| use_svo_timestamps | Use the SVO timestamps to publish data. If false, data will be published at the system time | true, false |

| publish_svo_clock | When use_svo_timestamps is true allows to publish the SVO clock to the /clock topic. This is useful for synchronous rosbag playback | true, false |

| svo_loop | Restart the SVO if the end of the file is reached | true, false |

| svo_realtime | if true SVO will be played trying to respect the original framerate eventually skipping frames, otherwise every frame will be processed respecting the pub_frame_rate setting | true, false |

| play_from_frame | Start playing the SVO from a specific frame ID | int |

| replay_rate | Replay rate for the SVO when not used in realtime mode | double, [0.10-5.0] |

Streaming input parameters #

Namespace: stream

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| stream_address | Address of the local streaming server | string |

| stream_port | Port of the local streaming server (only odd values are valid) | int |

Simulation input parameters #

Namespace: simulation

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| sim_enabled | Enable simulation input | true, false |

| sim_address | Address of the simulation server | string |

| sim_port | Port of the simulation server (only odd values are valid) | int |

Streaming server parameters #

Namespace: stream_server

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| stream_enabled | enable the streaming server when the camera is open | true, false |

| codec | ifferent encoding types for image streaming: ‘H264’, ‘H265’ | string |

| port | Port used for streaming. Port must be an even number. Any odd number will be rejected. | int |

| bitrate | Streaming bitrate (in Kbits/s) used for streaming. See doc | int, [1000 - 60000] |

| gop_size | The GOP size determines the maximum distance between IDR/I-frames. Very high GOP size will result in slightly more efficient compression, especially on static scenes. But latency will increase. | int, [max 256] |

| adaptative_bitrate | Bitrate will be adjusted depending the number of packet dropped during streaming. If activated, the bitrate can vary between [bitrate/4, bitrate]. | true, false |

| chunk_size | Stream buffers are divided into X number of chunks where each chunk is chunk_size bytes long. You can lower chunk_size value if network generates a lot of packet lost: this will generates more chunk for a single image, but each chunk sent will be lighter to avoid inside-chunk corruption. Increasing this value can decrease latency. | int, [1024 - 65000] |

| target_framerate | Framerate for the streaming output. This framerate must be below or equal to the camera framerate. Allowed framerates are 15, 30, 60 or 100 if possible. Any other values will be discarded and camera FPS will be taken. | int |

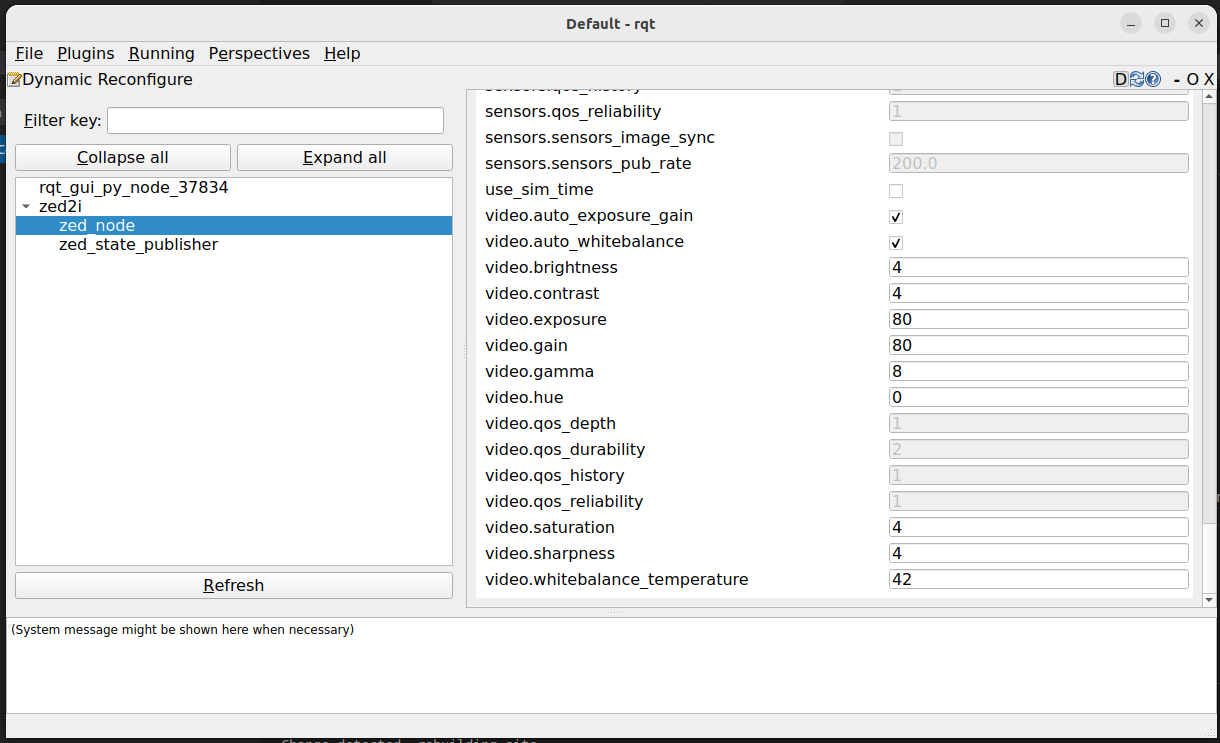

Video parameters #

Namespace: video

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| saturation* | Image saturation | int, [0,8] |

| sharpness* | Image sharpness | int, [0,8] |

| gamma* | Image gamma | int, [0,8] |

| auto_exposure_gain* | Enable the auto exposure and auto gain | true, false |

| exposure* | Exposure value if auto-exposure is false | int [0,100] |

| gain* | Gain value if auto-exposure is false | int [0,100] |

| auto_whitebalance* | Enable the auto white balance | true, false |

| whitebalance_temperature* | White balance temperature | int [28,65] |

| enable_24bit_output | Enable BGR 24-bit output for images (instead of 32-bit) | true, false |

| publish_rgb | Enable publishing of RGB image streams | true, false |

| publish_left_right | Enable publishing of Left and Right image streams | true, false |

| publish_raw | Enable publishing of raw (unrectified) image streams | true, false |

| publish_gray | Enable publishing of grayscale image streams | true, false |

| publish_stereo | Enable publishing of stereo pair image streams | true, false |

* Dynamic parameter

zed.yaml, zed2.yaml, aand zed2i.yaml

| Parameter | Description | Value |

|---|---|---|

| brightness* | Image brightness | int, [0,8] |

| contrast* | Image contrast | int, [0,8] |

| hue* | Image hue | int, [0,11] |

* Dynamic parameter

zedx.yaml, zedxm.yaml, and virtual.yaml

| Parameter | Description | Value |

|---|---|---|

| exposure_time* | Defines the real exposure time in microseconds. Recommended to control manual exposure (instead of video.exposure setting) | int, [28,30000] |

| auto_exposure_time_range_min* | Defines the minimum range of exposure auto control in micro seconds | int |

| auto_exposure_time_range_max* | Defines the maximum range of exposure auto control in micro seconds | int |

| exposure_compensation* | Defines the Exposure-target compensation made after auto exposure. Reduces the overall illumination target by factor of F-stops. | int, [0 - 100] |

| analog_gain* | Defines the real analog gain (sensor) in mDB. Recommended to control manual sensor gain (instead of video.gain setting) | int, [1000-16000]. |

| auto_analog_gain_range_min* | Defines the minimum range of sensor gain in automatic control | int |

| auto_analog_gain_range_max* | Defines the maximum range of sensor gain in automatic control | int |

| digital_gain* | Defines the real digital gain (ISP) as a factor. Recommended to control manual ISP gain (instead of video.gain setting) | int, [1-256] |

| auto_digital_gain_range_min* | Defines the minimum range of digital ISP gain in automatic control | int |

| auto_digital_gain_range_max* | Defines the maximum range of digital ISP gain in automatic control | int |

| denoising* | Defines the level of denoising applied on both left and right images | int, [0-100] |

* Dynamic parameter

Region of Interest parameters #

Namespace: region_of_interest

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| automatic_roi | Enable the automatic ROI generation to automatically detect part of the robot in the FoV and remove them from the processing. Note: if enabled the value of manual_polygon is ignored | true, false |

| depth_far_threshold_meters | Filtering how far object in the ROI should be considered, this is useful for a vehicle for instance | double |

| image_height_ratio_cutoff | By default consider only the lower half of the image, can be useful to filter out the sky | double |

| manual_polygon | A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to ‘1.0’ to be resolution independent. | string |

| apply_to_depth | Apply ROI to depth processing | true, false |

| apply_to_positional_tracking | Apply ROI to positional tracking processing | true, false |

| apply_to_object_detection | Apply ROI to object detection processing | true, false |

| apply_to_body_tracking | Apply ROI to body tracking processing | true, false |

| apply_to_spatial_mapping | Apply ROI to spatial mapping processing | true, false |

| publish_roi_mask | Publish the ROI mask image | true, false |

Depth parameters #

Namespace: depth

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| depth_mode | Select depth map quality | string, ‘NEURAL_LIGHT’, ‘NEURAL’, ‘NEURAL_PLUS’ |

| depth_stabilization | Enable depth stabilization. Stabilizing the depth requires an additional computation load as it enables tracking | int, [0,100] |

| openni_depth_mode | Enable/disable the depth OpenNI format (16 bit, millimeters) | int, [0,1] |

| point_cloud_freq* | frequency of the pointcloud publishing (equal or less to grab_frame_rate value) | double |

| point_cloud_res | The resolution used for point cloud publishing - ‘COMPACT’-Standard resolution. Optimizes processing and bandwidth, ‘REDUCED’-Half resolution. Low processing and bandwidth requirements | string, ‘COMPACT’, ‘REDUCED’ |

| depth_confidence* | Depth confidence threshold (lower values = more filtering) | int [0,100] |

| depth_texture_conf* | Depth tectureconfidence threshold (lower values = more filtering) | int [0,100] |

| remove_saturated_areas* | If true color saturated areas are not included in the depth map | true, false |

| publish_depth_map | Enable publishing of depth map stream | true, false |

| publish_depth_info | Enable publishing of depth info stream | true, false |

| publish_point_cloud | Enable publishing of point cloud stream | true, false |

| publish_depth_confidence | Enable publishing of depth confidence map stream | true, false |

| publish_disparity | Enable publishing of disparity image stream | true, ` |

📌 Note: To disable depth calculation set

qualitytoNONE. All the other parameters relative to depth will be ignored and only the video streams and sensors data will be published.

* Dynamic parameter

zed.yaml, zedm.yaml, zed2.yaml, zed2i.yaml, zedx.yaml, zedxm.yaml, and virtual.yaml

| Parameter | Description | Value |

|---|---|---|

| min_depth | Minimum depth value (from the camera) that will be computed | double |

| max_depth | Maximum range allowed for depth. Values beyond this limit will be reported as TOO_FAR | double, ]0.0,20.0] |

* Dynamic parameter

Positional tracking parameters (Odometry and Localization) #

Namespace: pos_tracking

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| pos_tracking_enabled | Enable positional tracking when the node starts | true, false |

| pos_tracking_mode | Use GEN_1 for old behaviors, GEN_2 is deprecated; use GEN_3 for the new algorithms | string, ‘GEN_1’, ‘GEN_2’, ‘GEN_3’ |

| imu_fusion | Enable IMU fusion. When set to false, only the optical odometry will be used. | true, false |

| publish_tf | Enable publishing of transform odom -> base_link and all the other transforms | true, false |

| publish_map_tf | Enable publishing of transform map -> odom. Only valid if publish_tf is true | true, false |

| map_frame | Frame_id of the pose message | string |

| odometry_frame | Frame_id of the odom message | string |

| area_memory_db_path | The full path of the space memory DB | string |

| area_memory | Enable Loop Closing algorithm | true, false |

| reset_odom_with_loop_closure | Enable the automatic reset of the odometry when a loop closure event is detected. | true, false |

| depth_min_range | Set this value for removing fixed zones of the robot in the FoV of the camerafrom the visual odometry evaluation | double |

| set_as_static | If ’true’ the camera will be static and not move in the environment | true, false |

| set_gravity_as_origin | If ’true’ align the positional tracking world to imu gravity measurement. Keep the yaw from the user initial pose. | true, false |

| floor_alignment | Indicates if the floor must be used as origin for height measures | true, false |

| initial_base_pose | Initial reference pose. Useful if the base_link does not start from the origin | array |

| path_pub_rate | Frequency (Hz) of publishing of the path messages | double |

| path_max_count | Maximum number of poses kept in the pose arrays (-1 for infinite) | int |

| two_d_mode | Enable if robot moves on a planar surface. Forces Z to fixed_z_value, roll and pitch to zero | true, false |

| fixed_z_value | Fixed value assigned to Z coordinate if two_d_mode is true | double |

| transform_time_offset | The value added to the timestamp of map->odom and odom->base_link transform being generated | double |

| reset_pose_with_svo_loop | Reset the camera pose the initial_base_pose when the SVO loop is enabled and the SVO playback reaches the end of the file | true, false |

| publish_odom_pose | Enable publishing of odometry and pose messages | true, false |

| publish_pose_covariance | Enable publishing of pose_with_covariance message | true, false |

| publish_cam_path | Enable publishing of path_odom message | true, false |

Global Localization (GNSS Fusion) #

Namespace: gnss_fusion

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| gnss_fusion_enabled | Enable the fusion of ‘sensor_msg/NavSatFix’ message information into pose data | true, false |

| gnss_fix_topic | Name of the GNSS topic of type NavSatFix to subscribe [Default: “/gps/fix”] | string |

| gnss_zero_altitude | Set to true to ignore GNSS altitude information | true, false |

| h_covariance_mul | Multiplier factor to be applied to horizontal covariance of the received fix (plane X/Y) | double |

| v_covariance_mul | Multiplier factor to be applied to vertical covariance of the received fix (Z axis) | double |

| gnss_frame | The TF frame of the GNSS sensor [Default: “gnss_link”] | string |

| publish_utm_tf | Publish utm -> map TF | true, false |

| broadcast_utm_transform_as_parent_frame | If ’true’ publish utm -> map TF, otherwise map -> utm | true, false |

| enable_reinitialization | Determines whether reinitialization should be performed between GNSS and VIO fusion when a significant disparity is detected between GNSS data and the current fusion data. It becomes particularly crucial during prolonged GNSS signal loss scenarios. | true, false |

| enable_rolling_calibration | If this parameter is set to true, the fusion algorithm will used a rough VIO / GNSS calibration at first and then refine it. This allow you to quickly get a fused position | true, false |

| enable_translation_uncertainty_target | When this parameter is enabled (set to true), the calibration process between GNSS and VIO accounts for the uncertainty in the determined translation, thereby facilitating the calibration termination. The maximum allowable uncertainty is controlled by the ’target_translation_uncertainty’ parameter | true, false |

| gnss_vio_reinit_threshold | Determines the threshold for GNSS/VIO reinitialization. If the fused position deviates beyond out of the region defined by the product of the GNSS covariance and the gnss_vio_reinit_threshold, a reinitialization will be triggered | double |

| target_translation_uncertainty | Defines the target translation uncertainty at which the calibration process between GNSS and VIO concludes. By default, the threshold is set at 10 centimeters. | double |

| target_yaw_uncertainty | Defines the target yaw uncertainty at which the calibration process between GNSS and VIO concludes. The unit of this parameter is in radian. By default, the threshold is set at 0.1 radians | double |

Mapping parameters #

Namespace: mapping

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| mapping_enabled | Enable/disable the mapping module | true, false |

| resolution | Resolution of the fused point cloud [0.01, 0.2] | double, default=0.1 |

| max_mapping_range | Maximum depth range while mapping in meters (-1 for automatic calculation) [2.0, 20.0] | double |

| fused_pointcloud_freq | Publishing frequency (Hz) of the 3D map as fused point cloud | double |

| clicked_point_topic | Topic published by Rviz when a point of the cloud is clicked. Used for plane detection | string, default "/clicked_point" |

| pd_max_distance_threshold | Plane detection: controls the spread of plane by checking the position difference | double |

| pd_normal_similarity_threshold | Plane detection: controls the spread of plane by checking the angle difference | double |

| publish_det_plane | Enable publishing of the detected planes | true, false |

Sensors parameters #

Namespace: sensors

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| publish_imu_tf | Enable publishing of transform imu_link -> left_camera_frame. | true, false |

| sensors_image_sync | Synchronize Sensors messages with latest published video/depth message | true, false |

| sensors_pub_rate | Frequency of publishing of sensors data. MAX: 400. - MIN: grab rate | double |

| publish_imu | Enable publishing of IMU data | true, false |

| publish_imu_raw | Enable publishing of raw IMU data | true, false |

| publish_cam_imu_transf | Enable publishing of transform between camera and IMU frames | true, false |

| publish_mag | Enable publishing of Magnetometer data | true, false |

| publish_baro | Enable publishing of Barometer data | true, false |

| publish_temp | Enable publishing of Temperature data | true, false |

Object detection parameters #

Namespace: object_detection

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| od_enabled | Automatically enables Object Detection when the node starts | true, false |

| enable_tracking | Whether the object detection system includes object tracking capabilities across a sequence of images | true, false |

| model | Detection model | string, ‘MULTI_CLASS_BOX_FAST’, ‘MULTI_CLASS_BOX_MEDIUM’, ‘MULTI_CLASS_BOX_ACCURATE’, ‘PERSON_HEAD_BOX_FAST’, ‘PERSON_HEAD_BOX_ACCURATE’, ‘CUSTOM_YOLOLIKE_BOX_OBJECTS’ |

| allow_reduced_precision_inference | Allow inference to run at a lower precision to improve runtime and memory usage | true, false |

| max_range | Defines a upper depth range for detections [m] | double |

| prediction_timeout | During this time [sec], the object will have OK state even if it is not detected. Set this parameter to 0 to disable SDK predictions | double |

| filtering_mode | Defines the filtering mode that should be applied to raw detections. | ‘0’: NONE - ‘1’: NMS3D - ‘2’: NMS3D_PER_CLASS |

| allow_reduced_precision_inference | Allow inference to run at a lower precision to improve runtime and memory usage | true, false |

object_detection.yaml

| Parameter | Description | Value |

|---|---|---|

| class.people.enabled* | Enable detection of people | true, false |

| class.people.confidence_threshold* | Minimum value of the detection confidence of people | double, [0,99] |

| class.vehicles.enabled* | Enable detection of vehicles | true, false |

| class.vehicles.confidence_threshold* | Minimum value of the detection confidence of vehicles | double, [0,99] |

| class.bags.enabled* | Enable detection of bags | true, false |

| class.bags.confidence_threshold* | Minimum value of the detection confidence of bags | double, [0,99] |

| class.animal.enabled* | Enable detection of animals | true, false |

| class.animal.confidence_threshold* | Minimum value of the detection confidence of animals | double, [0,99] |

| class.electronics.enabled* | Enable detection of electronics | true, false |

| class.electronics.confidence_threshold* | Minimum value of the detection confidence of electronics | double, [0,99] |

| class.fruit_vegetable.enabled* | Enable detection of fruit and vegetables | true, false |

| class.fruit_vegetable.confidence_threshold* | Minimum value of the detection confidence of fruit and vegetables | double, [0,99] |

| class.sports.enabled* | Enable detection of sports | true, false |

| class.sports.confidence_threshold* | Minimum value of the detection confidence of sports | double, [0,99] |

* Dynamic parameter

custom_object_detection.yaml

| Parameter | Description | Value |

|---|---|---|

| object_detection.custom_onnx_file | Path to the YOLO-like ONNX file for custom object detection directly performed by the ZED SDK | string |

| object_detection.custom_onnx_input_size | Resolution used with the YOLO-like ONNX file. For example, 512 means a input tensor ‘1x3x512x512’ | int |

| custom_class_count | Number of classes in the custom ONNX file. For example, 80 for YOLOv8 trained on COCO dataset | int |

FOR EACH CLASS CREATE THE FOLLOWING PARAMETERS

| Parameter | Description | Value |

|---|---|---|

| object_detection.class_XXX.label | Label of the custom class. The label must be unique and not used by other classes | string |

| object_detection.class_XXX.model_class_id | Class ID of the object in the custom ONNX file (it is not required that this value matches the value in the ‘class_XXX’ identifier) | int |

| object_detection.class_XXX.enabled* | Enable/disable the detection of this class | true, false |

| object_detection.class_XXX.confidence_threshold* | Minimum value of the detection confidence of an object [0,99] | int |

| object_detection.class_XXX.is_grounded | Provide hypothesis about the object movements (degrees of freedom or DoF) to improve the object tracking | true, false |

| object_detection.class_XXX.is_static* | Provide hypothesis about the object staticity to improve the object tracking | true, false |

| object_detection.class_XXX.tracking_timeout* | Maximum tracking time threshold (in seconds) before dropping the tracked object when unseen for this amount of time | double |

| object_detection.class_XXX.tracking_max_dist* | Maximum tracking distance threshold (in meters) before dropping the tracked object when unseen for this amount of meters. Only valid for static object | double |

| object_detection.class_XXX.max_box_width_normalized* | Maximum allowed width normalized to the image size | double |

| object_detection.class_XXX.min_box_width_normalized* | Minimum allowed width normalized to the image size | double |

| object_detection.class_XXX.max_box_height_normalized* | Maximum allowed height normalized to the image size | double |

| object_detection.class_XXX.min_box_height_normalized* | Minimum allowed height normalized to the image size | double |

| object_detection.class_XXX.max_box_width_meters* | Maximum allowed 3D width | double |

| object_detection.class_XXX.min_box_width_meters* | Minimum allowed 3D width | double |

| object_detection.class_XXX.max_box_height_meters* | Maximum allowed 3D height | double |

| object_detection.class_XXX.min_box_height_meters* | Minimum allowed 3D height | double |

| object_detection.class_XXX.object_acceleration_preset* | Object acceleration preset | string, ‘DEFAULT’, ‘LOW’, ‘MEDIUM’, ‘HIGH’ |

| object_detection.class_XXX.max_allowed_acceleration* | If set with a different value from the default [100000], this value takes precedence over the selected preset, allowing for a custom maximum acceleration. Unit is m/s^2 | double |

* Dynamic parameter

Body Tracking parameters #

Namespace: body_tracking

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| bt_enabled | True to enable Body Tracking | true, false |

| model | Detection model | string, ‘HUMAN_BODY_FAST’, ‘HUMAN_BODY_MEDIUM’, ‘HUMAN_BODY_ACCURATE’ |

| body_format | Skeleton format | string, ‘BODY_18’,‘BODY_34’,‘BODY_38’,‘BODY_70’ |

| allow_reduced_precision_inference | Allow inference to run at a lower precision to improve runtime and memory usage | true, false |

| max_range | Defines a upper depth range for detections [m] | double |

| body_kp_selection | Defines the body selection outputted by the sdk when retrieveBodies is called | string, ‘FULL’, ‘UPPER_BODY’ |

| enable_body_fitting | Defines if the body fitting will be applied | true, false |

| enable_tracking | Defines if the object detection will track objects across images flow | true, false |

| prediction_timeout_s | During this time [sec], the skeleton will have OK state even if it is not detected. Set this parameter to 0 to disable SDK predictions | double |

| confidence_threshold* | Minimum value of the detection confidence of skeleton key points | double, [0,99] |

| minimum_keypoints_threshold* | Minimum number of skeleton key points to be detected for a valid skeleton | int |

* Dynamic parameter

Body Tracking parameters #

Namespace: body_tracking

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| stream_enabled | Enable the streaming server when the camera is open | true, false |

| codec | Different encoding types for image streaming | string, ‘H264’, ‘H265’ |

| port | Port used for streaming. Port must be an even number. Any odd number will be rejected. | int |

| bitrate | Streaming bitrate (in Kbits/s) used for streaming. | int, [1000 - 60000] |

| gop_size | The GOP size determines the maximum distance between IDR/I-frames. Very high GOP size will result in slightly more efficient compression, especially on static scenes. But latency will increase. | int, [max 256] |

| adaptative_bitrate | Bitrate will be adjusted depending the number of packet dropped during streaming. If activated, the bitrate can vary between [bitrate/4, bitrate]. | true, false |

| chunk_size | Stream buffers are divided into X number of chunks where each chunk is chunk_size bytes long. You can lower chunk_size value if network generates a lot of packet lost: this will generates more chunk for a single image, but each chunk sent will be lighter to avoid inside-chunk corruption. Increasing this value can decrease latency. | int, [1024 - 65000] |

| target_framerate | Framerate for the streaming output. This framerate must be below or equal to the camera framerate. Allowed framerates are 15, 30, 60 or 100 if possible. Any other values will be discarded and camera FPS will be taken. | int |

Debug parameters #

Namespace: debug

common_stereo.yaml

| Parameter | Description | Value |

|---|---|---|

| sdk_verbose | Set the verbose level of the ZED SDK | int, 0 -> disable |

| sdk_verbose_log_file | Path to the file where the ZED SDK will log its messages. If empty, no file will be created. The log level can be set using the sdk_verbose parameter. | string |

| use_pub_timestamps | Use the current ROS time for the message timestamp instead of the camera timestamp. This is useful to test data communication latency. | true, false |

| debug_common | Enable general debug log outputs | true, false |

| debug_sim | Enable simulation debug log outputs | true, false |

| debug_video_depth | Enable video/depth debug log outputs | true, false |

| debug_camera_controls | Enable camera controls debug log outputs | true, false |

| debug_point_cloud | Enable point cloud debug log outputs | true, false |

| debug_positional_tracking | Enable positional tracking debug log outputs | true, false |

| debug_gnss | Enable GNSS fusion debug log outputs | true, false |

| debug_sensors | Enable sensors debug log outputs | true, false |

| debug_mapping | Enable mapping debug log outputs | true, false |

| debug_terrain_mapping | Enable terrain mapping debug log outputs | true, false |

| debug_object_detection | Enable object detection debug log outputs | true, false |

| debug_body_tracking | Enable body tracking debug log outputs | true, false |

| debug_roi | Enable region of interest debug log outputs | true, false |

| debug_streaming | Enable streaming debug log outputs | true, false |

| debug_advanced | Enable advanced debug log outputs | true, false |

| debug_nitros | Enable Nitros debug log outputs | true, false |

| disable_nitros | If available, disable NITROS usage for debugging and testing purposes | true, false |

Dynamic parameters #

The ZED node lets you reconfigure many parameters dynamically during the execution of the node. All the dynamic parameters are indicated with a * in the list above and with the tag [DYNAMIC] in the comments of the YAML files.

You can set the parameters using the CLI command ros2 param set, e.g.:

ros2 param set /zed/zed_node depth.confidence 80

if the parameter is set successfully, you will get a confirmation message:

Set parameter successful

In the case you tried to set a parameter that’s not dynamically reconfigurable, or you specified an invalid value, you will get this type of error:

ros2 param set /zed/zed_node depth.confidence 150

Set parameter failed

and the ZED node will report a warning message explaining the error type:

1538556595.265117561: [zed.zed_node] [WARN] The param 'depth.confidence' requires an INTEGER value in the range ]0,100]

You can also use the Configuration -> Dynamic Reconfigure plugin of the rqt tool to dynamically set parameters by using a graphic interface.

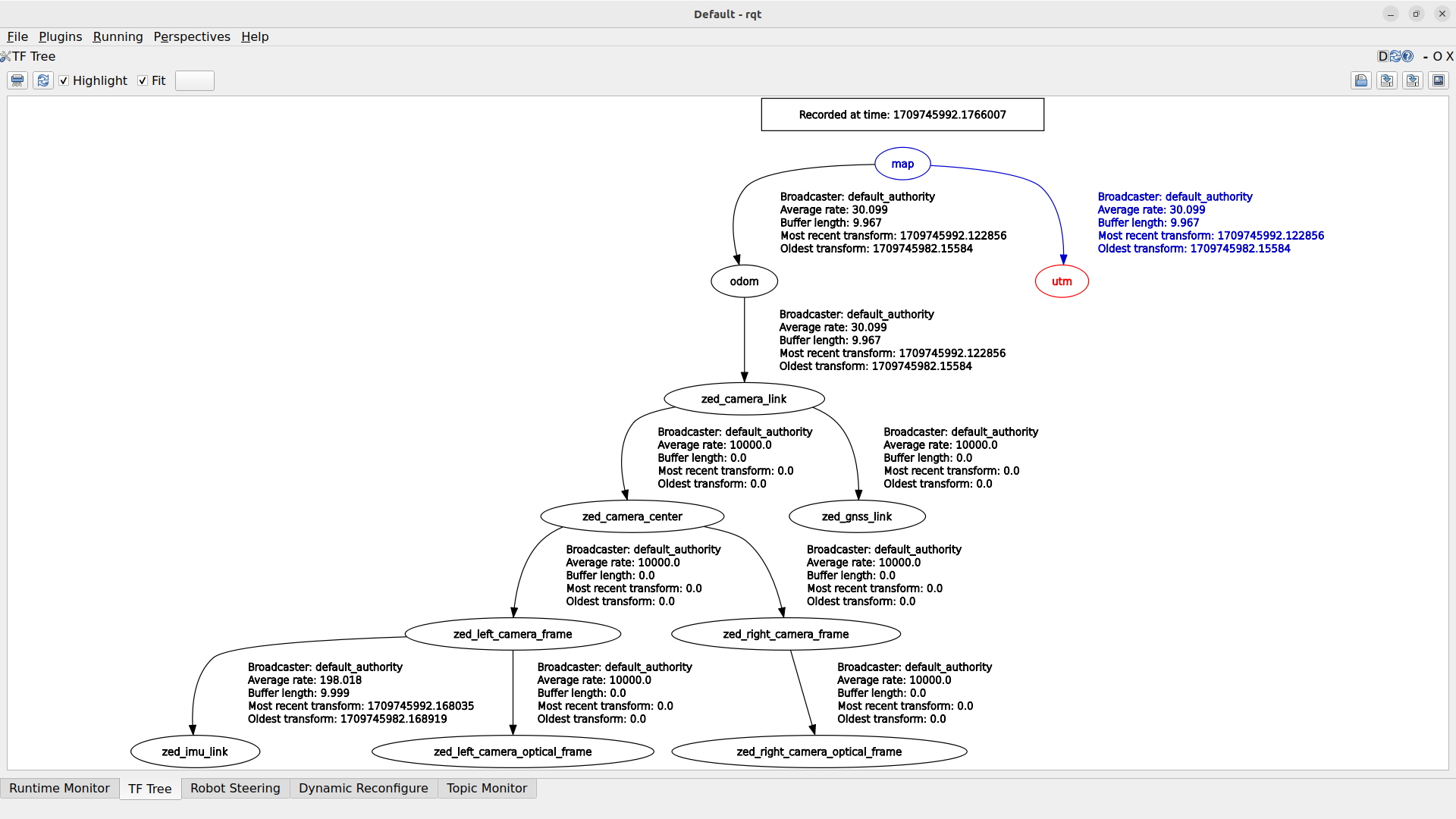

Transform frames #

The ZED ROS 2 wrapper broadcasts multiple coordinate frames that each provides information about the camera’s position and orientation. If needed, the reference frames can be changed in the launch file.

<camera_name>_camera_linkis the current position and orientation of the ZED base center. It corresponds to the bottom central fixing hole.<camera_name>_camera_centeris the current position and orientation of ZED middle baseline, determined by visual odometry and the tracking module.<camera_name>_right_camerais the position and orientation of the ZED’s right camera.<camera_name>_right_camera_opticalis the position and orientation of the ZED’s right camera optical frame.<camera_name>_left_camerais the position and orientation of the ZED’s left camera.<camera_name>_left_camera_opticalis the position and orientation of the ZED’s left camera optical frame.<camera_name>_imu_linkis the origin of the inertial data frame (not available with ZED).<camera_name>_mag_linkis the origin of the magnetometer frame (ZED2/ZED2i only).<camera_name>_baro_linkis the origin of the barometer frame (ZED2/ZED2i only).<camera_name>_temp_left_linkis the origin of the left temperature frame (ZED2/ZED2i only).<camera_name>_temp_right_linkis the origin of the right temperature frame (ZED2/ZED2i only).

For RVIZ 2 compatibilty, the root frame map_frame is called map.

The TF tree generated by the zed_wrapper reflects the standard descripted in REP105.

The odometry frame is updated using only the “visual inertial odometry” information (loop closure is not applied).

The map frame is updated using the Positional Tracking algorithm provided by the Stereolabs SDK, fusing the inertial information from the IMU sensor, and applying loop closure information.

map_frame (`map`)

└─odometry_frame (`odom`)

└─camera_link (`<camera_name>_camera_link`)

└─camera_frame (`<camera_name>_camera_center`)

└─left_camera_frame (`<camera_name>_left_camera_frame`)

| └─left_camera_optical (`<camera_name>_left_camera_optical`)

| └─imu_frame (`<camera_name>_imu_link`)

└─right_camera_frame (`<camera_name>_right_camera_frame`)

└─right_camera_optical (`<camera_name>_right_camera_optical`)

When the Geo Tracking module is enabled the ZED Node TF Tree reflects the following diagram:

Services #

The ZED node provides the following services:

~/reset_odometry: Resets the odometry values eliminating the drift due to the Visual Odometry algorithm. The new odometry value is set to the latest camera pose received from the tracking algorithm.~/reset_pos_tracking: Restarts the Tracking algorithm setting the initial pose to the value available in the param server or to the latest pose set with the serviceset_pose.~/set_pose: Restarts the Tracking algorithm setting the initial pose of the camera to the value passed as vector parameter -> [X, Y, Z, R, P, Y]~/enable_obj_det: enable/disable object detection.~/enable_body_trk: enable/disable body tracking.~/enable_mapping: enable/disable spatial mapping.~/enable_streaming: enable/disable a local streaming server.~/start_svo_rec: Start recording an SVO file. If no filename is provided the default zed.svo is used. If no path is provided with the filename the default recording folder is ~/.ros/~/stop_svo_rec: Stop an active SVO recording.~/toggle_svo_pause: set/reset SVO playing pause. Note: Only available ifgeneral.svo_realtimeis false and if an SVO as been chosen as input source.~/set_svo_frame: Set the SVO playback frame to the specified value.~/set_roi: Set the Region of Interest to the described polygon.~/reset_roi: Reset the Region of Interest to the full image frame.~/toLL: converts frommapcoordinate frame to Latitude/Longitude Note: Only available if GNSS fusion is enabled~/fromLL: converts from Latitude/Longitude tomapcoordinate frame Note: Only available if GNSS fusion is enabled

Services can be called using the rqt graphical tool by enabling the plugin Plugins -> Services -> Service caller.

It is possible to call a service also by using the CLI command ros2 service call.

For example to start Object Detection for the ZED2 camera:

ros2 service call /zed/zed_node/enable_obj_det std_srvs/srv/SetBool data:\ true\

and the service server will reply:

waiting for service to become available...

requester: making request: std_srvs.srv.SetBool_Request(data=True)

response:

std_srvs.srv.SetBool_Response(success=True, message='Object Detection started')

Assigning a GPU to a camera #

To improve performance, you can specify the gpu_id of the graphic card that will be used for the depth computation in the common_stereo.yaml configuration file. The default value (-1) will select the GPU with the highest number of CUDA cores. When using multiple ZEDs, you can assign each camera to a GPU to increase performance.

Node Diagnostic #

The ZED ROS 2 node publishes useful diagnostic information aggregated into the /diagnostics topic using the diagnostic_updater package.

Diagnostic information can be analyzed and parsed by using ROS 2 tools, like for example the Runtime Monitor plugin of rqt.

Available information:

- Uptime

- Grab frequency and processing time

- Frame drop rate

- Input mode

- Video and Depth publishing rate and processing time

- Depth Mode

- Point Cloud publishing rate and processing time

- GNSS Fusion status

- Odometry and Localization status

- TF broadcasting rate

- Object Detection status and publishing rate

- Body Tracking status and publishing rate

- IMU TF broadcasting rate

- Sensor data publishing rate

- Camera Internal temperatures

- SVO playback status

- SVO recording status

- Streaming server status